The Blueprint Programming Model

A fundamental feature of Blueprint is its orthogonal programming model, which factorizes application logic into two distinct domains: infrastructure and processing. The infrastructure component (a superset of coordination) can be thought of as a concurrent schedule with a number of responsibilities:

It must ensure that the processing functions are executed in a legitimate order (calculation dependencies must be observed).

Data must be delivered to the right place at the right time; in particular, component interfaces must be prototyped and validated (data types, quality of service, etc.).

Automatic (transient) data must persist until it is no longer referenced, and its space must then be returned for reallocation.

These objectives must be achieved in a thread-safe manner (data races, deadlocks, etc. must be avoided).
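The responsibilities above can be illustrated with a small sketch. The article prescribes no language for this, so Python is used for brevity. The `Circuit` class and its `add`/`run` methods are hypothetical and are not part of CDL or the Blueprint runtime. The infrastructure runs plain processing functions in an order that respects their declared inputs (a topological order), delivers each result to its consumers, and releases each transient result as soon as its last consumer has run. Because the sketch is sequential, the thread-safety responsibility does not arise here.

```python
from collections import deque
from typing import Callable, Dict, List


class Circuit:
    """Hypothetical infrastructure layer: it owns execution order and data
    lifetime, while the registered functions contain only processing."""

    def __init__(self) -> None:
        self.funcs: Dict[str, Callable] = {}
        self.inputs: Dict[str, List[str]] = {}
        self.order: List[str] = []      # execution order of the last run
        self.released: List[str] = []   # transient results freed during the last run

    def add(self, name: str, func: Callable, inputs: List[str]) -> None:
        self.funcs[name] = func
        self.inputs[name] = inputs

    def run(self, sinks: List[str]) -> Dict[str, object]:
        refcount = {name: 0 for name in self.funcs}       # outstanding consumers
        consumers: Dict[str, List[str]] = {name: [] for name in self.funcs}
        pending = {name: len(deps) for name, deps in self.inputs.items()}
        for name, deps in self.inputs.items():
            for dep in deps:
                refcount[dep] += 1
                consumers[dep].append(name)

        ready = deque(name for name, n in pending.items() if n == 0)
        store: Dict[str, object] = {}
        self.order, self.released = [], []
        while ready:
            name = ready.popleft()
            # Deliver inputs: every dependency has already produced its result.
            store[name] = self.funcs[name](*(store[d] for d in self.inputs[name]))
            self.order.append(name)
            # Release transient data once its last consumer has run.
            for dep in self.inputs[name]:
                refcount[dep] -= 1
                if refcount[dep] == 0 and dep not in sinks:
                    del store[dep]
                    self.released.append(dep)
            # A consumer becomes ready when all of its inputs exist.
            for c in consumers[name]:
                pending[c] -= 1
                if pending[c] == 0:
                    ready.append(c)

        if len(self.order) != len(self.funcs):
            raise ValueError("dependency cycle: some functions can never run")
        return {s: store[s] for s in sinks}


circuit = Circuit()
circuit.add("read", lambda: [3, 1, 2], [])
circuit.add("sort", sorted, ["read"])
circuit.add("total", sum, ["read"])
circuit.add("report", lambda s, t: (s, t), ["sort", "total"])
print(circuit.run(["report"]))   # {'report': ([1, 2, 3], 6)}
print(circuit.released)          # ['read', 'sort', 'total']
```

None of the processing functions (`sorted`, `sum`, the lambdas) knows how or when it is called; ordering and data lifetime live entirely in `Circuit`.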

Another way to think of this partition is that processing determines what is executed, whilst infrastructure determines how and when it is executed. In practice, this means that CDL replaces thread synchronization, messaging and other ad hoc interfacing with a single unified paradigm, referred to as connective logic, which explicitly addresses timing issues such as deadlocks, race conditions and priority inversions in a localized, repeatable manner.

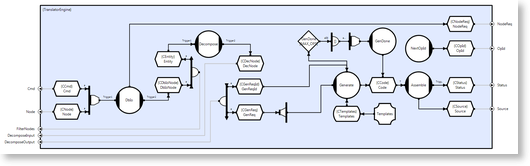

This separation allows coding to be specialized, and naturally leads to a hybrid language approach in which processing logic is expressed textually (C++, C#, Java, etc.) and infrastructure logic can be expressed graphically (CDL). This is similar to digital electronics, where some aspects of the logic are most easily expressed in text (e.g. VHDL) and others in schematics (circuitry). In fact, CDL diagrams are usually referred to as circuits.

The diagram above shows a circuit, which is actually part of the CDL translator. The circular objects (circuit methods) are repositories for processing code, and these are scheduled by the runtime in accordance with the constraints imposed by the surrounding CDL circuitry. This highlights a fundamental property of CLIP programming: infrastructure is considered macroscopic and invokes processing (which is considered microscopic). This contrasts with more conventional concurrent programming models, in which semaphores, locks, sockets, etc. are invoked from within the processing layer. In the former case, infrastructure is localized to a contiguous description; in the latter, the equivalent logic is fragmented and dispersed, which obscures it.

Orthogonal Programming

CLiP's infrastructure-oriented programming model corresponds conceptually with mainstream design methodologies, in which infrastructure is considered macroscopic and is most often represented in terms of its connectivity (boxes and lines). Anecdotal evidence suggests that many engineers, given a whiteboard and a marker pen and asked for a top-level description of their system, will draw pictures rather than write words. The same engineers, however, would probably find it easier to describe a processing task such as an arithmetic computation textually, typically using looping and branching constructs together with various logical and mathematical operations. This separation in the treatment of infrastructure and processing appears to be intuitive.

Much of the macroscopic description that architects currently capture in UML as a means of directing programmers has a direct CDL equivalent. This has enabled architects, who generally work in diagrams as a matter of course, to bypass translation by programmers and code their infrastructures directly. It also enables application specialists with limited conventional programming experience, but a detailed understanding of the required algorithms (and their potential concurrency), to realize their intentions more precisely. For example, architects working on the UK's Surface Ship Torpedo Defense (SSTD) system recently accounted for around 30% of the final delivered object code (compiled from their translated CDL), and their engineers were, for the most part, unaffected by the concurrent nature of the project.

A principal requirement for CDL was that all infrastructural mechanisms be incorporated into a single, unified, machine-translatable symbolism that could be used interoperably with standard CDL-independent processing code. This means that legacy processing code does not need to be modified for concurrent (multi-core) execution, and most engineers can continue to work in a sequential programming environment with familiar languages and tools. Because orthogonalized processing code does not 'call' down into infrastructure, it cannot make assumptions about it; this reduces lock-in and maximizes the portability and reuse of processing code.

When infrastructure and processing are fully separated (at the file level), it generally becomes apparent that infrastructural aspects tend to be platform-specific, whilst most processing is largely portable. This suggests that the processing abstractions provided by today's high-level languages are generally good, but that their treatment of infrastructure requires further abstraction: CLIP provides platform-independent infrastructure.

Visual Programming

Once infrastructure and processing are factorized, it seems reasonable to ask whether the concurrent nature of the former is most intuitively captured by sequential text. Although we have no scientific evidence for this, it does seem that people find it easier to process a concurrent world visually than linguistically. This is perhaps not surprising: an image can usually be understood in more or less any order, whereas in text, word order is intrinsic to meaning.

The growing popularity of UML suggests that, given the choice, there are at least some aspects of system behavior that engineers prefer to describe schematically. Our contention is that coordination logic falls into this category. CDL therefore offers users the choice, but it is worth noting that the symbolism came first and the textual API was developed to reflect it, rather than a symbolism being developed to represent a primarily textual description. It is also worth pointing out again that there are, of course, many other aspects of software that are far better described in words; matrix inversion, for example, is difficult to picture.

There are also a number of precedents to consider in other engineering disciplines. Because most branches of engineering deal with physical objects such as ships, buildings and planes, rather than logical abstractions such as ones and zeros in computer memory, there is a high level of correspondence between their models, designs and implementations. At a macroscopic level, the shuttle models, the shuttle blueprints and the shuttles themselves bear a resemblance that is easy to see. We would argue that the success of GUI designers reflects just such a correspondence in that branch of software. Dragging and dropping buttons onto a screen is far more intuitive than writing the textual description that the designer tool subsequently generates for you for free (it is immediately obvious if something is missing or in the wrong place).

Without exception, early adopters of CLIP have chosen to develop their applications using the CDL symbolism and then translate these circuits to text. As a matter of history, the first circuits were drawn by Ultra Electronics in 1993 for a range of high-performance digital sidescan sonars, and although the symbolism has matured considerably since then, those circuits contained many of the fundamental concepts of the final language.

Frameworks versus Glue

It is also worth asking why so many companies are prepared to invest almost as much time developing UML models as they do cutting code, particularly when the volume of code that the models themselves generate is generally fairly small. Anecdotal evidence to date suggests that architects and engineers are comfortable with the concept of a visible framework that they can incrementally populate with detail. This is analogous to a hull or an airframe, and is conceptually tangible.

What engineers don't seem to like is the concept of glue; this probably reflects the fact that localized logic is far easier to reason about than dispersed logic. If we have to search our code for semaphore or mutex handles, we are more likely to overlook some small detail that results in a deadlock or a race condition. Because architects have been able to think in terms of frameworks while programmers have been equipped with languages that provide glue, there is an awkward discontinuity in the development process. We would argue that applications have structure in exactly the same way that aircraft have airframes, but because of the way we implement them, we often cannot see the wood for the trees.

Because of their orthogonality, coordination languages have the potential to localize infrastructure descriptions. CDL circuitry replaces the notion of glue with the notion of a framework and takes this one stage further by allowing engineers to create a fully executable framework, which does not need the details of data or processing in order to run.

This has led early adopters toward a slightly different engineering process, referred to as the 'infrastructure oriented lifecycle', in which, in essence, infrastructure (in the form of circuitry) is designed and implemented first. This contrasts with what might be called the component oriented lifecycle, in which processing components are identified first and then interfaced (glued) at a later stage. Conceptually, the Blueprint approach is more akin to building an airframe, chassis or hull, and the main stage of the engineering process becomes one of population (fitting out). This has the added benefit that if race conditions and other timing-related problems do exist, they can be located and remedied without the added complexity of processing logic, and hence earlier in the lifecycle.
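A minimal sketch of the 'framework first, populate later' idea, assuming a purely linear pipeline (real circuits are far richer). The `Framework` class, its stage names and its stub behaviour are all hypothetical. The skeleton is wired and executable before any processing exists; each stage starts as a pass-through stub and is later replaced by real processing without touching the wiring.

```python
from typing import Any, Callable, Dict, List, Tuple

Stage = Callable[[Any, List[str]], Any]


def stub(name: str) -> Stage:
    """Placeholder processing: records that the stage ran and passes data through."""
    def run(data: Any, trace: List[str]) -> Any:
        trace.append(name)
        return data
    return run


class Framework:
    """Hypothetical executable skeleton: wiring is fixed first, processing fitted later."""

    def __init__(self, stages: List[str]) -> None:
        self.stages = stages
        self.impl: Dict[str, Stage] = {s: stub(s) for s in stages}

    def populate(self, stage: str, func: Callable[[Any], Any]) -> None:
        """Fit real processing into an existing stage; the wiring is unchanged."""
        if stage not in self.impl:
            raise KeyError(f"no such stage: {stage}")

        def run(data: Any, trace: List[str]) -> Any:
            trace.append(stage)
            return func(data)
        self.impl[stage] = run

    def run(self, data: Any) -> Tuple[Any, List[str]]:
        trace: List[str] = []
        for s in self.stages:
            data = self.impl[s](data, trace)
        return data, trace


fw = Framework(["acquire", "filter", "detect"])
print(fw.run([5, -1, 7]))   # ([5, -1, 7], ['acquire', 'filter', 'detect'])
fw.populate("filter", lambda xs: [x for x in xs if x > 0])
print(fw.run([5, -1, 7]))   # ([5, 7], ['acquire', 'filter', 'detect'])
```

Because the skeleton runs end to end from the start, ordering problems in the wiring show up before any processing logic has been written.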

Lockless Programming Models

In many cases, scheduling can be used as an alternative to locking. Note that although software transactional memory (STM) is described as a lockless technology, it is still based on the notion of exclusion (albeit non-blocking); in this case, however, conflicts can be detected, rolled back and recovered from. STM is applicable to intrinsically concurrent applications, but we will argue that it may not be necessary for a significant proportion of multi-core migration.
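To illustrate what exclusion with rollback means in practice, here is a deliberately reduced Python stand-in for STM covering a single shared value. A transaction reads a value and its version, computes a tentative result privately, and commits only if no one else has committed in the meantime; otherwise it discards the result and retries. Real STM tracks read and write sets across many locations, and Python offers no user-level compare-and-swap, so a very short internal lock stands in for one. `VersionedCell` and `atomically` are hypothetical names.

```python
import threading
from typing import Any, Callable, Tuple


class VersionedCell:
    """A single shared value with a version number (hypothetical helper)."""

    def __init__(self, value: Any) -> None:
        self._value = value
        self._version = 0
        # Stand-in for an atomic compare-and-swap; never held while user code runs.
        self._guard = threading.Lock()

    def read(self) -> Tuple[Any, int]:
        with self._guard:
            return self._value, self._version

    def try_commit(self, seen_version: int, new_value: Any) -> bool:
        with self._guard:
            if self._version != seen_version:
                return False          # conflict: another transaction committed first
            self._value = new_value
            self._version += 1
            return True


def atomically(cell: VersionedCell, update: Callable[[Any], Any]) -> Any:
    """Optimistic update: compute privately, then commit or roll back and retry."""
    while True:
        value, version = cell.read()
        new_value = update(value)     # tentative result, invisible to other threads
        if cell.try_commit(version, new_value):
            return new_value
        # Conflict detected: discard the tentative result and try again.


counter = VersionedCell(0)


def bump(times: int) -> None:
    for _ in range(times):
        atomically(counter, lambda v: v + 1)


threads = [threading.Thread(target=bump, args=(1000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter.read())  # (4000, 4000)
```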

It is tempting to assume that locks are a necessary evil for all concurrent (multi-threaded and/or multi-process) programming. In fact, this is very often not the case. There is a very important distinction between applications that are intrinsically concurrent (they involve timing dependencies such as those associated with asynchronous I/O) and those that have a purely sequential description but require parallelization. In general, the latter will execute repeatably and use concurrency purely as a means of improving performance. A sequential component that executes repeatably is referred to as schedulable.

Although a full discussion is beyond the scope of this article, most locking is gratuitous in the case of schedulable components and can be replaced by a composable set of event operators (multiplexors, distributors and collectors). The CORE runtime implementation of these operators naturally makes extensive use of locking, but this is hidden from CDL application programmers, who do not need to be concerned with these details.
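The article does not define the semantics of these operators, so the following Python sketch only approximates a distributor and a collector under stated assumptions: the distributor fans each work item out to a thread pool, and the collector fires a continuation once all expected results have arrived. `Collector` and `distribute` are hypothetical names, and no multiplexor is shown. The lock lives inside the operator, while the processing function (`square`) contains no synchronization at all.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


class Collector:
    """Hypothetical collector: fires `on_complete` once all expected parts arrive.
    Its lock is internal; the processing functions never see it."""

    def __init__(self, expected: int, on_complete: Callable[[List], None]) -> None:
        self._parts: List = [None] * expected
        self._remaining = expected
        self._lock = threading.Lock()
        self._on_complete = on_complete

    def deliver(self, index: int, value) -> None:
        with self._lock:
            self._parts[index] = value
            self._remaining -= 1
            last = self._remaining == 0
        if last:                      # exactly one caller sees the final part
            self._on_complete(list(self._parts))


def distribute(pool: ThreadPoolExecutor, func: Callable, items: List,
               collector: Collector) -> None:
    """Hypothetical distributor: fans each item out to the pool and routes
    each result, tagged with its position, to the collector."""
    for i, item in enumerate(items):
        pool.submit(lambda i=i, item=item: collector.deliver(i, func(item)))


def square(x: int) -> int:          # plain processing: no locks, no threads
    return x * x


totals: List[int] = []
with ThreadPoolExecutor(max_workers=4) as pool:   # exiting waits for all tasks
    distribute(pool, square, [1, 2, 3, 4],
               Collector(4, lambda parts: totals.append(sum(parts))))
print(totals)  # [30]
```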

In practice, many applications that do involve asynchronous I/O (e.g. HCIs or sockets) can be factorized fairly straightforwardly as a set of schedulable components with non-deterministic interactions (most processing code turns out to be deterministic even if its scheduling is not).

This distinction matters because the arrival of multi-core means that many sequential components will need to be parallelized in order to take advantage of the technology, and almost all of this can potentially be done without resorting to the non-composable, impenetrable world of locks, semaphores, monitors and critical sections, all of which are major sources of deadlocks, logjams and races.

Most programmers agree that the use of static data is usually gratuitous, in the sense that it is a convenient but dubious alternative to passing data as function-call arguments. Object state is exactly the same: in a deterministic calculation it is always accessed in the order determined by a given input, so it too can be passed as arguments.
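The difference between hidden state and argument passing can be shown in a few lines of Python (illustrative names only). The first two versions keep their running total in static data and in object state respectively, so the result of each call depends on every earlier call; the third passes the total explicitly, so each call depends only on its arguments and the dependency chain is visible in the data flow.

```python
from functools import reduce

# Static-data style: a hidden module-level total couples successive calls, so
# call order matters but is invisible in the signature, and two concurrent
# users would corrupt each other's results.
_running_total = 0


def add_static(x: int) -> int:
    global _running_total
    _running_total += x
    return _running_total


# Object state has the same problem, scoped to an instance.
class Accumulator:
    def __init__(self) -> None:
        self.total = 0

    def add(self, x: int) -> int:
        self.total += x
        return self.total


# Argument-passing style: state flows through arguments and return values.
def add(total: int, x: int) -> int:
    return total + x


print(reduce(add, [1, 2, 3], 0))  # 6
```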

Given that schedulable components can be restructured to pass all data as arguments, their potential concurrency is determined by calculation dependencies (the order in which the calculation must proceed). In the case of commutative operations, where we care only about exclusion and not about order, we simply need to ensure that the operations are scheduled exclusively (or are thread-safe). In practice, repeatable computations are mostly composed of non-commutative operations (order matters), so locks are insufficient anyway (hence the frequent need for semaphores).
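The following Python sketch (hypothetical function names, standard `concurrent.futures` API) illustrates the distinction. Both functions run the work items concurrently and fold the results in a single thread, so exclusion is guaranteed in each case. `fold_as_completed` folds results in whatever order they finish, which is only correct for a commutative and associative combine such as addition; `fold_in_order` folds them in input order, as a non-commutative combine such as string concatenation requires.

```python
import operator
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def fold_as_completed(func: Callable[[T], R], items: Iterable[T],
                      combine: Callable[[R, R], R], start: R) -> R:
    """Exclusion only: results are folded one at a time (in this thread) but in
    whatever order they finish. Correct only for commutative combines."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        futures = [pool.submit(func, x) for x in items]
        acc = start
        for fut in as_completed(futures):     # completion order is arbitrary
            acc = combine(acc, fut.result())
    return acc


def fold_in_order(func: Callable[[T], R], items: Iterable[T],
                  combine: Callable[[R, R], R], start: R) -> R:
    """Scheduled: work items still run concurrently, but results are folded
    in input order, which non-commutative operations require."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        results = list(pool.map(func, items))  # map preserves input order
    acc = start
    for r in results:
        acc = combine(acc, r)
    return acc


print(fold_as_completed(lambda x: x * x, range(10), operator.add, 0))  # 285
print(fold_in_order(str, range(5), operator.add, ""))                   # 01234
```

With `operator.add` on strings, `fold_as_completed` may return the digits in any order, which is why ordering, and not merely exclusion, has to be scheduled.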

If we think of the problem in terms of schedules rather than locks, we can localize our logic in a single, contiguous, composable description, with the obvious advantage of modularity (CDL circuitry). But just as coarse-grain locking severely limits the concurrency we can achieve, so does coarse-grain scheduling. The benefit of the latter, however, is that scheduling granularity is very easy to refine incrementally, because changes are localized (circuitry is nestable). By contrast, locking granularity is extremely difficult to refine incrementally, because the required changes are generally dispersed. This means that granularity is no longer limited by complexity, but only by scheduling overhead. For this reason, the CDL runtime replaces thread context switching with a lighter-weight alternative (threads only block when there is no available work).
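As a rough illustration of threads that only block when there is no available work, here is a hypothetical run-to-completion worker pool in Python; it is not the CDL runtime. A fixed set of threads pulls small tasks from one ready queue and blocks in `queue.get()` only when the queue is empty, so creating a finer-grain task costs a queue operation rather than a new thread. The usage refines granularity by recursively splitting a range into smaller tasks. Because of CPython's global interpreter lock, this illustrates the scheduling structure, not a CPU speed-up.

```python
import queue
import threading
from typing import Callable, List, Optional


class WorkerPool:
    """Hypothetical run-to-completion scheduler: a fixed set of threads pulls
    small tasks from one ready queue and blocks only when it is empty."""

    def __init__(self, workers: int = 4) -> None:
        self._ready: queue.Queue = queue.Queue()
        self._threads: List[threading.Thread] = [
            threading.Thread(target=self._loop, daemon=True) for _ in range(workers)
        ]
        for t in self._threads:
            t.start()

    def submit(self, task: Callable[[], None]) -> None:
        self._ready.put(task)

    def _loop(self) -> None:
        while True:
            task: Optional[Callable[[], None]] = self._ready.get()  # blocks only if no work
            try:
                if task is None:          # shutdown sentinel
                    return
                task()                    # runs to completion (error handling omitted)
            finally:
                self._ready.task_done()

    def wait_idle(self) -> None:
        """Return once every submitted task, including tasks they spawned, has finished."""
        self._ready.join()

    def shutdown(self) -> None:
        for _ in self._threads:
            self._ready.put(None)
        for t in self._threads:
            t.join()


pool = WorkerPool(workers=4)
out = [0] * 100


def square_range(lo: int, hi: int, grain: int = 8) -> None:
    if hi - lo > grain:                   # refine granularity by splitting the work
        mid = (lo + hi) // 2
        pool.submit(lambda: square_range(lo, mid, grain))
        pool.submit(lambda: square_range(mid, hi, grain))
    else:
        for i in range(lo, hi):
            out[i] = i * i                # disjoint slots, so no lock is needed


pool.submit(lambda: square_range(0, 100))
pool.wait_idle()
pool.shutdown()
```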

The good news is that most of the time we are actually dealing with schedulable components. We can therefore address the problem with a scheduling approach, and limit locking to the relatively small subset of functionality that deals with the interfaces between asynchronously executing schedulable components (e.g. a GUI and its back-end processing).
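A minimal Python sketch of confining synchronization to the interface between an asynchronous front end and a schedulable back end, with hypothetical names throughout: the only thread-safe objects are two standard `queue.Queue` instances, while `word_count` is ordinary deterministic processing. The loop that posts requests merely stands in for GUI events.

```python
import queue
import threading
from typing import Dict, Optional, Tuple


def word_count(text: str) -> int:
    """Schedulable processing: deterministic, no locks, no knowledge of threads."""
    return len(text.split())


def backend(requests: queue.Queue, replies: queue.Queue) -> None:
    """Back end: the two queues are the only synchronization it touches."""
    while True:
        item: Optional[Tuple[int, str]] = requests.get()
        if item is None:                  # shutdown sentinel from the front end
            break
        req_id, text = item
        replies.put((req_id, word_count(text)))


request_q: queue.Queue = queue.Queue()
reply_q: queue.Queue = queue.Queue()
worker = threading.Thread(target=backend, args=(request_q, reply_q))
worker.start()

# Stand-in for an asynchronous front end (e.g. GUI events arriving at any time).
for i, text in enumerate(["open file", "run the translator now"]):
    request_q.put((i, text))
request_q.put(None)
worker.join()

answers: Dict[int, int] = dict(reply_q.get() for _ in range(2))
print(answers)  # {0: 2, 1: 4}
```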

Whilst CLIP is generic in the sense that it addresses both intrinsic and extrinsic concurrency (with arbitrated stores replacing raw locks), it specifically provides a means of factoring out the latter, resulting in considerable simplification and enormous potential for automating the migration process. This approach has now been successfully demonstrated for a broad range of intrinsically and extrinsically concurrent applications.

An obvious example of this approach is CLiP's own toolset. The host IDE has a complex GUI component and is therefore intrinsically concurrent, but the back-end processing (translation, analysis, etc.) is necessarily schedulable.
