Description

Reentrancy determines the number of events that an object can simultaneously hold open, and this can be used to liberate potential circuit concurrency. Its precise action is object-specific. Typically, an object's reentrancy is set to the number of CPUs available to the executing process, which allows all worker threads to be kept busy.
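As an illustration only, the idea of an object that can hold at most a fixed number of events open can be modelled with a counting semaphore. CDL circuits are not written in Python, and `ReentrantObject`, `try_open` and `close` are hypothetical names invented for this sketch. Note also that `os.cpu_count()` reports the CPUs in the machine, which may be more than the number available to the process.

```python
import os
import threading


class ReentrantObject:
    """Toy model: an object that holds at most `reentrancy` events open at once."""

    def __init__(self, reentrancy):
        self.reentrancy = reentrancy
        self._slots = threading.BoundedSemaphore(reentrancy)

    def try_open(self):
        """Open an event if a slot is free; return False instead of blocking."""
        return self._slots.acquire(blocking=False)

    def close(self):
        """Close a previously opened event, freeing its slot."""
        self._slots.release()


# Typical setting described above: one open event per CPU.
cpus = os.cpu_count() or 1
obj = ReentrantObject(cpus)

opened = [obj.try_open() for _ in range(cpus)]  # every open succeeds
overflow = obj.try_open()                       # fails: all slots are in use
obj.close()                                     # one consumer closes its event
reopened = obj.try_open()                       # a slot is free again
```

With one slot per CPU, each worker thread can hold an event open at the same time, which is the condition for keeping all of them busy.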

The sections below describe the ramifications of reentrancy on an object-by-object basis, but the examples that follow are probably the best way to understand the application of multiple reentrancy.

Collectors

The reentrancy of a collector determines the number of consumers that can simultaneously 'open' its collected events. In order for the collector's reentrancy to liberate extra concurrency, all collected objects must have a reentrancy that is equal to, or greater than, that provided by the collector. Likewise, the collector's consumers must also have at least equal reentrancy, or they will not be able to benefit from the collector's reentrancy. If a collector has a reentrancy of 'N', for example, then up to 'N' method instances could simultaneously open the same collector element, and each would have its own unique collection of data. The method instances could 'close' the collector in any order.

One important property of sequential collector reentrancy is that collection sequences cannot overtake earlier collections that are still in progress. Suppose, for example, that a collector is collecting from two transient stores, and that the collector's first consumer has collected a buffer from the first store but is still waiting for a buffer from the second. The second collection can then progress only as far as collecting from the first store. When the second store subsequently receives an event, it is guaranteed to be given to the first collection. In practice, this means that sequential collections complete in the order in which they start. Note, however, that collector events can be closed in any order. Note also that reentrancy is different from dimensionality: there is no restriction on the order in which adjacent collector elements progress their collections.
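The ordering rule can be sketched with a small simulation. This is a simplified model rather than CDL's implementation: `SequentialCollector` and `offer` are hypothetical names, and the model tracks only collection progress, not the opening and closing of completed events.

```python
from collections import deque


class SequentialCollector:
    """Toy model of a collector that gathers one buffer from each input store."""

    def __init__(self, n_inputs, reentrancy):
        self.n_inputs = n_inputs
        self.reentrancy = reentrancy
        self.in_progress = deque()  # partially filled collections, oldest first
        self.completed = []         # finished collections, in completion order
        self.next_id = 0

    def offer(self, input_index, buffer):
        """Deliver a buffer from one store; return True if it was accepted."""
        # The oldest collection still missing this input always gets the
        # buffer, so a later collection can never overtake an earlier one.
        for coll in self.in_progress:
            if input_index not in coll["parts"]:
                coll["parts"][input_index] = buffer
                break
        else:
            if len(self.in_progress) >= self.reentrancy:
                return False  # no free collection: the event has to wait
            coll = {"id": self.next_id, "parts": {input_index: buffer}}
            self.next_id += 1
            self.in_progress.append(coll)
        # Retire collections from the front while they are complete.
        while (self.in_progress
               and len(self.in_progress[0]["parts"]) == self.n_inputs):
            self.completed.append(self.in_progress.popleft())
        return True


collector = SequentialCollector(n_inputs=2, reentrancy=2)
collector.offer(0, "a0")             # first collection takes store 0
collector.offer(0, "a1")             # second collection starts on store 0
blocked = collector.offer(0, "a2")   # rejected: both collections are busy
collector.offer(1, "b0")             # goes to the FIRST collection
collector.offer(1, "b1")             # now the second collection completes
order = [coll["id"] for coll in collector.completed]
```

In this run the first buffer from the second store is paired with `"a0"`, not `"a1"`, so the collections complete in the order in which they started.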

Competers

The reentrancy of a competer determines the number of consumers that can simultaneously 'open' its provided events. As with all other cases, the competer's reentrancy can only be realized if the object that it consumes from can provide an equal level of reentrancy.

Distributors

Distributor reentrancy is similar to collector and competer reentrancy in that it determines the number of consumers that can simultaneously open events that are provided to the distributor, but in this case, it provides a notion of 'run-ahead'.

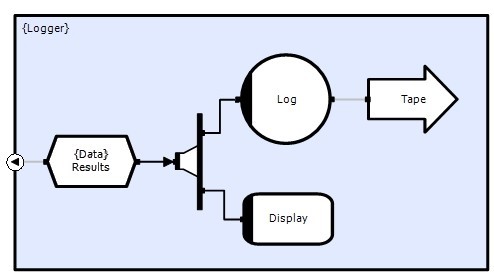

Suppose, for example, that an application produces a periodic data stream that is distributed to two separate processing chains that can execute in parallel (see example below). For the purpose of the example, assume that the first chain eventually provides a stream of display data that needs to update in real time, and that the second sends the same distributor input to a logging device that caches data and periodically writes to tape. In this case, we do not want the display chain to be held up by the transient delays caused by the tape device periodically writing buffered data, because this could cause screen jitter. If we give the distributor a reentrancy of 'N', the high priority real-time chain can run up to 'N' events ahead of the low priority output chain. Provided 'N' is large enough, the display will not be affected by the transient delays caused by the logging device, which catches up during the intermediate iterations that do not write to the device. This technique is referred to as 'de-coupling' and is frequently required by real-time data acquisition systems.
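The 'run-ahead' limit can be illustrated with a minimal model. It assumes that an event stays open in the distributor until every chain has closed it; `Distributor`, `advance` and the chain indices are hypothetical names for this sketch and are not CDL identifiers.

```python
class Distributor:
    """Toy model: one input stream fanned out to several consumer chains."""

    def __init__(self, n_consumers, reentrancy):
        self.reentrancy = reentrancy
        self.position = [0] * n_consumers  # events closed by each chain

    def can_advance(self, consumer):
        # An event is held open until every chain has closed it, so no chain
        # can get more than `reentrancy` events ahead of the slowest chain.
        lead = self.position[consumer] - min(self.position)
        return lead < self.reentrancy

    def advance(self, consumer):
        """Process one more event on a chain; return False if it must wait."""
        if not self.can_advance(consumer):
            return False
        self.position[consumer] += 1
        return True


DISPLAY, LOGGER = 0, 1
dist = Distributor(n_consumers=2, reentrancy=4)

# The logger stalls (writing to tape) while the display keeps trying to run.
ran_ahead = sum(dist.advance(DISPLAY) for _ in range(10))

# The logger catches up by one event, which frees one slot for the display.
logger_moved = dist.advance(LOGGER)
display_moved = dist.advance(DISPLAY)
```

With a reentrancy of 4, the display chain gets four events ahead during the stall and is then held until the logger closes an event.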

Multiplexors

Multiplexors have an implicit reentrancy that derives from the fact that each link on the consuming face can be simultaneously 'open'. However, each link can also have an additional reentrancy, and this determines the number of consumers that can simultaneously open a given link. This can be necessary for optimal concurrency in some cases.

Demultiplexors

Demultiplexor reentrancy determines the number of consumers that can simultaneously open events on a given providing link. Note that this can only be used to liberate concurrency if the corresponding multiplexor link has at least the same reentrancy.

Reducers

Reducer reentrancy determines the number of consumers that can simultaneously open events that are provided by the reducer.

Repeaters

Repeater reentrancy determines the number of consumers that can simultaneously open events that are provided by the repeater.

Splitters

Splitter reentrancy determines the number of consumers that can simultaneously open a given splitter link. Note that in order for this reentrancy to liberate concurrency, the corresponding collector must have at least the same reentrancy (and so must all the event propagators in between).

Compound events consumed by the splitter are not closed until all providing links have closed, and so reentrancy is required by splitters that need to decouple their consumers (see distributor 'run-ahead' above). Events are delivered to the splitter's output links in the order that they are consumed by the splitter.

Transient Stores

Transient store reentrancy is determined by buffer depth. This determines the total number of buffers that can be simultaneously opened for read and/or write operations. Typically, stores are double-buffered, which allows one thread to read the previous iteration whilst another writes the next iteration in parallel.
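A minimal sketch of double buffering follows, assuming that a buffer counts as in use from the moment it is opened for writing until its reader closes it. `TransientStore` and its methods are hypothetical names for this model, not the CDL interface.

```python
from collections import deque


class TransientStore:
    """Toy model of a transient store with a fixed buffer depth."""

    def __init__(self, depth=2):
        self.depth = depth
        self.free = depth     # buffers not being written, queued or read
        self.ready = deque()  # written buffers waiting for a reader

    def open_write(self):
        """Claim a free buffer for writing; return False if none is free."""
        if self.free == 0:
            return False
        self.free -= 1
        return True

    def close_write(self, data):
        """Finish writing: the buffer becomes available to a reader."""
        self.ready.append(data)

    def open_read(self):
        """Take the oldest written buffer, or None if there is none yet."""
        return self.ready.popleft() if self.ready else None

    def close_read(self):
        """Finish reading: the buffer returns to the free pool."""
        self.free += 1


store = TransientStore(depth=2)
store.open_write()
store.close_write("iteration 0")

reading = store.open_read()         # reader holds iteration 0 ...
writing_next = store.open_write()   # ... while the writer starts iteration 1
third = store.open_write()          # refused: both buffers are in use
store.close_read()
after_close = store.open_write()    # a buffer is free again
```

A depth of two is the smallest that lets a read and a write overlap; with a depth of one, the writer would have to wait for the reader to close.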

Arbitrated Stores

Arbitrated stores do not provide transient events because reads are non-destructive. They therefore do not have a 'reentrancy' attribute.

Methods

Method reentrancy determines the concurrency of a given method instance. If the method has persistent state then this is usually 1, but if it is stateless, it is usually set to the number of CPUs available to the executing process (providing optimal concurrency).
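The difference between the two settings can be sketched with ordinary threads. This is an analogy and not CDL code: the `Method` wrapper, `square` and `accumulate` are hypothetical names, and a semaphore stands in for the method's reentrancy limit.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class Method:
    """Toy model: a callable that admits at most `reentrancy` concurrent calls."""

    def __init__(self, func, reentrancy):
        self._func = func
        self._gate = threading.Semaphore(reentrancy)
        self._lock = threading.Lock()
        self._active = 0
        self.peak = 0  # highest number of simultaneous executions observed

    def __call__(self, *args):
        with self._gate:
            with self._lock:
                self._active += 1
                self.peak = max(self.peak, self._active)
            try:
                return self._func(*args)
            finally:
                with self._lock:
                    self._active -= 1


def square(x):
    """Stateless: safe to run in as many instances as there are workers."""
    return x * x


state = {"total": 0}


def accumulate(x):
    """Stateful: relies on a reentrancy of 1 to protect the running total."""
    state["total"] += x
    return state["total"]


workers = 4
stateless = Method(square, reentrancy=workers)
stateful = Method(accumulate, reentrancy=1)

with ThreadPoolExecutor(max_workers=workers) as pool:
    squares = list(pool.map(stateless, range(8)))
    list(pool.map(stateful, range(8)))
```

Here the stateful method never runs in more than one instance at a time, so its running total needs no further locking, while the stateless method may use every worker.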

CDL does provide circuit scope locks that allow stateful methods to be safely reentered, but in practice these are seldom necessary. If they are required, then they are typically created within method and/or circuit workspace.

Examples

In the example above, a transient store event stream is distributed to a data logger and a display call-back. In practice, the logger device is likely to use a cache mechanism and will therefore experience transient loads. The display call-back, on the other hand, will execute in the GUI thread and is therefore required to execute in a far more timely manner (to avoid 'jitter'). For the display to update in a timely manner, it needs to be de-coupled from the logger, and the distributor's reentrancy determines the permissible event advance/lag that the two consuming chains can experience without affecting each other. The larger the reentrancy, the less coupled the chains are, but the more buffers the store needs to provide, and hence the larger the memory requirement.
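The trade-off can be made concrete with a rough back-of-envelope estimate. The sizing rule below is an assumption made for illustration and is not taken from CDL: it supposes that the reentrancy should cover the number of events that arrive during the logger's longest stall, and that each open event needs one store buffer. The figures (a 250 ms stall, a 10 ms event period, 64 KiB buffers) are invented.

```python
def required_reentrancy(stall_ms, period_ms):
    """Events that arrive during one logger stall, rounded up (at least 1)."""
    return max(1, -(-stall_ms // period_ms))  # integer ceiling division


def store_memory_bytes(reentrancy, buffer_bytes):
    """Memory needed if every open event occupies one store buffer."""
    return reentrancy * buffer_bytes


# Hypothetical figures: the logger stalls for up to 250 ms while events
# arrive every 10 ms, and each buffer holds 64 KiB.
n = required_reentrancy(stall_ms=250, period_ms=10)
memory = store_memory_bytes(n, buffer_bytes=64 * 1024)
```

Under these assumptions the display chain would need to run 25 events ahead, at a cost of 25 buffers (1600 KiB) in the store.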